
March 17, 2025

Continuous Reproducibility: How DevOps principles could improve the speed and quality of scientific discovery

In the 21st century, the Agile and DevOps movements revolutionized software development, reducing waste, improving quality, enhancing innovation, and ultimately increasing the speed at which software products were brought into the world. At the same time, the pace of scientific innovation appears to have slowed [1], with many findings failing to replicate (validated in an end-to-end sense by reacquiring and reanalyzing raw data) or even reproduce (obtaining the same results by rerunning the same computational processes on the same input data). Science already borrows much from the software world in terms of tooling and best practices, which makes sense since nearly every scientific study involves computation, but there are still more practices yet to cross over.

Here I will focus on one set of practices in particular: those of Continuous Integration and Continuous Delivery (CI/CD). There has been some discussion about adapting these under the name Continuous Analysis [2], though since the concept extends beyond analysis and into generating other artifacts like figures and publications, here I will use the term Continuous Reproducibility (CR).

CI means that valuable changes to code are incorporated into a single source of truth, or “main branch,” as quickly as possible, resulting in a continuous flow of small changes rather than in larger, less frequent batches. CD means that these changes are accessible to the users as soon as possible, e.g., daily instead of quarterly or annual “big bang” releases.

CI/CD best practices ensure that software remains working and available while evolving, allowing developers to feel safe and confident about their modifications. Similarly, CR would ensure the research project remains reproducible, i.e., its output artifacts, like datasets, figures, slideshows, and publications, remain consistent with input data and process definitions. This would hypothetically allow researchers to make changes more quickly and in smaller batches without fear of breaking anything.

But CI and CD did not crop up in isolation. They arose as part of a larger wave of changes in software development philosophy.

In its less mature era, software was built using the traditional waterfall project management methodology. This approach broke projects down into distinct phases or “stage gates,” e.g., market research, requirements gathering, design, implementation, testing, deployment, which were intended to be done in a linear sequence, each taking weeks or months to finish. The industry eventually realized that this only works well for projects with low uncertainty, i.e., those where the true requirements can easily be defined up front and no new knowledge is uncovered between phases. These situations are of course rare in both product development and science.

These days, in the software product world, all of the phases are happening continuously and in parallel. The best teams are deploying new code many times per day, because generally, the more iterations, the more successful the product.

But it’s only possible to do many iterations if cycle times can be shortened. In the old waterfall framework, full cycle times were on the order of months or even years. Large batches of work were transferred between different teams in the form of documentation—a heavy and sometimes ineffective communication mechanism. Further, the processes to test and release software were manual, which meant they could be tedious, expensive, and/or error prone, providing an incentive to do them less often.

One strategy that helped reduce iteration cycle time was to reduce communication overhead by combining development and operations teams (hence “DevOps”). This allowed individuals to simply talk to each other instead of handing off formal documentation. Another crucial tactic was the automation of test and release processes with CI/CD pipelines. Combined, these made it practical to incorporate fewer changes in each iteration, which helped to avoid mistakes and deliver value to users more quickly—a critical priority in a competitive marketplace.

I’ve heard DevOps described as “turning collaborators into contributors.” To achieve this, it’s important to minimize the amount of effort required to get set up to start working on a project. Since automated CI/CD pipelines typically run on fresh or mostly stateless virtual machines, setting up a development/test environment needs to be automated, e.g., with the help of containers and/or package managers. These pipelines then serve as continuously tested documentation, which can be much more reliable than a list of steps written in a README.

So how does this relate to research projects, and are there potential efficiency gains to be had if similar practices were to be adopted?

In research projects we certainly might find ourselves thinking in a waterfall mindset, with a natural inclination to work in distinct, siloed phases, e.g., planning, data collection, data analysis, figure generation, writing, peer review. But is a scientific study really best modeled as a waterfall process? Do we never, for example, need to return to data analysis after starting the writing or peer review?

Instead, we could think of a research project as one continuous iterative process. Writing can be done the entire time in small chunks. For example, we can start writing the introduction to our first paper and thesis from day one, as we do our literature review. The methods section of a paper can be written as part of planning an experiment, and updated with important details while carrying it out. Data analysis and visualization code can be written and tested before data is collected, then run during data collection as a quality check. Instead of thinking of the project as a set of decoupled sub-projects, each a big step done one after the other, we could think of the whole thing as one unit that evolves in small steps.
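As a minimal sketch of writing a quality check before any data exists, assume measurements will arrive in batches of floating-point values. The Python below is purely illustrative: `check_batch`, `synthetic_batch`, and the plausibility limits are hypothetical names and numbers, not part of any particular tool. The check is exercised on synthetic data now and can later be run on each real batch as it is collected.

```python
import math
import random
from typing import Sequence


def check_batch(values: Sequence[float], lower: float, upper: float) -> list[str]:
    """Return human-readable problems found in one batch of measurements.

    `lower` and `upper` are hypothetical plausibility bounds for the measured
    quantity. An empty list means the batch passed.
    """
    if len(values) == 0:
        return ["batch is empty"]
    problems = []
    n_missing = sum(1 for v in values if math.isnan(v))
    if n_missing:
        problems.append(f"{n_missing} missing (NaN) value(s)")
    out_of_range = [v for v in values if not math.isnan(v) and not lower <= v <= upper]
    if out_of_range:
        problems.append(f"{len(out_of_range)} value(s) outside [{lower}, {upper}]")
    return problems


def synthetic_batch(n: int, seed: int = 0) -> list[float]:
    """Fake data so the check can be written and tested before real data exists."""
    rng = random.Random(seed)
    return [rng.gauss(20.0, 1.0) for _ in range(n)]


if __name__ == "__main__":
    clean = synthetic_batch(100)
    print(check_batch(clean, lower=0.0, upper=40.0))  # []
    # Simulate a dropped sample and a sensor glitch.
    bad = clean + [float("nan"), 1000.0]
    print(check_batch(bad, lower=0.0, upper=40.0))
```

Once real data starts flowing, the same function can run as one step of the project pipeline, so a bad batch is flagged the day it is recorded rather than months later during analysis.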

Similar to how software teams work, where an automated CD pipeline will build all artifacts, such as compiled binaries or minified web application code, and make them available to the users, we can build and deliver all of our research project artifacts each iteration with an automated pipeline, keeping them continuously reproducible. (Note that in this case “deliver” could mean to our internal team if we haven’t yet submitted to a journal.)

In any case, the correlation between more iterations and better outcomes appears to be mostly universal for all kinds of endeavors, so at the very least, we should look for behaviors that are hurting research project iteration cycle time. Here are a few DevOps-related ones I can think of:

| Problem or task | Slower, more error-prone solution ❌ | Better solution ✅ |
|---|---|---|
| Ensuring everyone on the team has access to the latest version of a file as soon as it is updated, and making them aware of the difference from the last version. | Send an email to the whole team with the file and change summary attached every time a file changes. | Use a single shared version-controlled repository for all files and treat it as the one source of truth. |
| Updating all necessary figures and publications after changing data processing algorithms. | Run downstream processes manually as needed, determining the sequence on a case-by-case basis. | Use a pipeline system that tracks inputs and outputs, uses caching to skip unnecessary expensive steps, and can run everything with a single command. |
| Ensuring the figures in a manuscript draft are up-to-date after changing a plotting script. | Manually copy/import the figure files from an analytics app into a writing app. | Edit the plotting scripts and manuscript files in the same app (e.g., VS Code) and keep them in the same repository. Update both with a single command. |
| Showing the latest status of the project to all collaborators. | Manually create a new slideshow for each update. | Update a single working copy of the figures, manuscripts, and slides as the project progresses so anyone can view them asynchronously. |
| Ensuring all collaborators can contribute to all aspects of the project. | Restrict certain tasks to certain individuals on the team, and email each other feedback for updating these. | Use a tool that automatically manages computational environments so it’s easy for anyone to get set up and run the pipeline. Or better, run the pipeline automatically with a CI/CD service like GitHub Actions. |

What do you think? Are you encountering these sorts of context switching and communication overhead losses in your own projects? Is it worth the effort to make a project continuously reproducible? I think it is, though I’m biased, since I’ve been working on tools to make it easier (Calkit; cf. this example CI/CD workflow).
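The “pipeline system” row of the table can be illustrated with a toy version of the idea. The Python sketch below is not how DVC or Calkit are implemented; it is a minimal illustration, assuming each stage declares its input files (`deps`), its output files (`outs`), and a Python function to run. Stages run in dependency order (via the standard library’s `graphlib`), and a stage is skipped when the SHA-256 hashes of its inputs match those recorded on the previous run and its outputs still exist. The `Stage` class, `run_pipeline` helper, lock file name, and example files are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from graphlib import TopologicalSorter
from pathlib import Path
from typing import Callable


@dataclass
class Stage:
    name: str
    deps: list[str]           # input files the stage reads
    outs: list[str]           # output files the stage writes
    func: Callable[[], None]  # the work itself, e.g., make a figure or build a PDF


def file_hash(path: str) -> str:
    """Fingerprint a file by its contents."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def run_pipeline(stages: list[Stage], lock_path: str = "pipeline-lock.json") -> list[str]:
    """Run stages in dependency order, skipping any whose inputs are unchanged.

    Returns the names of the stages that actually ran.
    """
    lock_file = Path(lock_path)
    lock = json.loads(lock_file.read_text()) if lock_file.exists() else {}

    # A stage depends on whichever stage produces one of its input files.
    producer = {out: s.name for s in stages for out in s.outs}
    graph = {s.name: {producer[d] for d in s.deps if d in producer} for s in stages}
    by_name = {s.name: s for s in stages}

    ran = []
    for name in TopologicalSorter(graph).static_order():
        stage = by_name[name]
        # Upstream stages have already run, so these hashes reflect fresh inputs.
        hashes = {d: file_hash(d) for d in stage.deps}
        outputs_exist = all(Path(o).exists() for o in stage.outs)
        if lock.get(name) == hashes and outputs_exist:
            continue  # cache hit: nothing this stage reads has changed
        stage.func()
        lock[name] = hashes
        ran.append(name)

    lock_file.write_text(json.dumps(lock, indent=2))
    return ran


if __name__ == "__main__":
    import os
    import tempfile

    os.chdir(tempfile.mkdtemp())  # work in a scratch directory
    Path("data.csv").write_text("1,2,3\n")

    def make_figure() -> None:
        total = sum(int(x) for x in Path("data.csv").read_text().split(","))
        Path("figure.txt").write_text(f"total={total}\n")

    def build_paper() -> None:
        Path("paper.txt").write_text("Figure: " + Path("figure.txt").read_text())

    # Listed out of order on purpose; the dependency graph sorts them.
    stages = [
        Stage("paper", deps=["figure.txt"], outs=["paper.txt"], func=build_paper),
        Stage("figure", deps=["data.csv"], outs=["figure.txt"], func=make_figure),
    ]
    print(run_pipeline(stages))  # ['figure', 'paper']
    print(run_pipeline(stages))  # [] (everything cached)
    Path("data.csv").write_text("1,2,4\n")
    print(run_pipeline(stages))  # ['figure', 'paper']
```

The design choice worth noting is hashing contents rather than checking timestamps: touching a file without changing it does not trigger a rerun, and a downstream stage is skipped if an upstream rerun happens to produce identical output.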

One argument you might have against adopting CR in your project is that you do very few “outer loop” iterations. That is, you are able to work effectively in phases, so, e.g., siloing the writing away from the data visualization is not slowing you down. I would argue, however, that analyzing and visualizing data concurrently while it’s being collected is a great way to catch errors, and the earlier an error is caught, the cheaper it is to fix. If the paper is set up and ready to write during data collection, important details can make their way in directly, removing a potential source of error from transcribing lab notebooks.

[Diagram: “Outer loop(s)”, a flowchart linking collect data → analyze data → visualize data → write paper, with arrows from each later stage back to every earlier one, plus a direct arrow from analyze data to write paper.]

[Diagram: “Inner loop”, a cycle of write → run → review → write.]

Using Calkit or a similar workflow like that of showyourwork, one can work on both outer and inner loop iterations in a single interface, e.g., VS Code, reducing context switching costs.

On the other hand, maybe the important cycle time(s) are not for iterations within a given study, but at a higher level—iterations between studies themselves. However, one could argue that delivering a fully reproducible project along with a paper provides a working template for the next study, effectively reducing that “outer outer loop” cycle time.

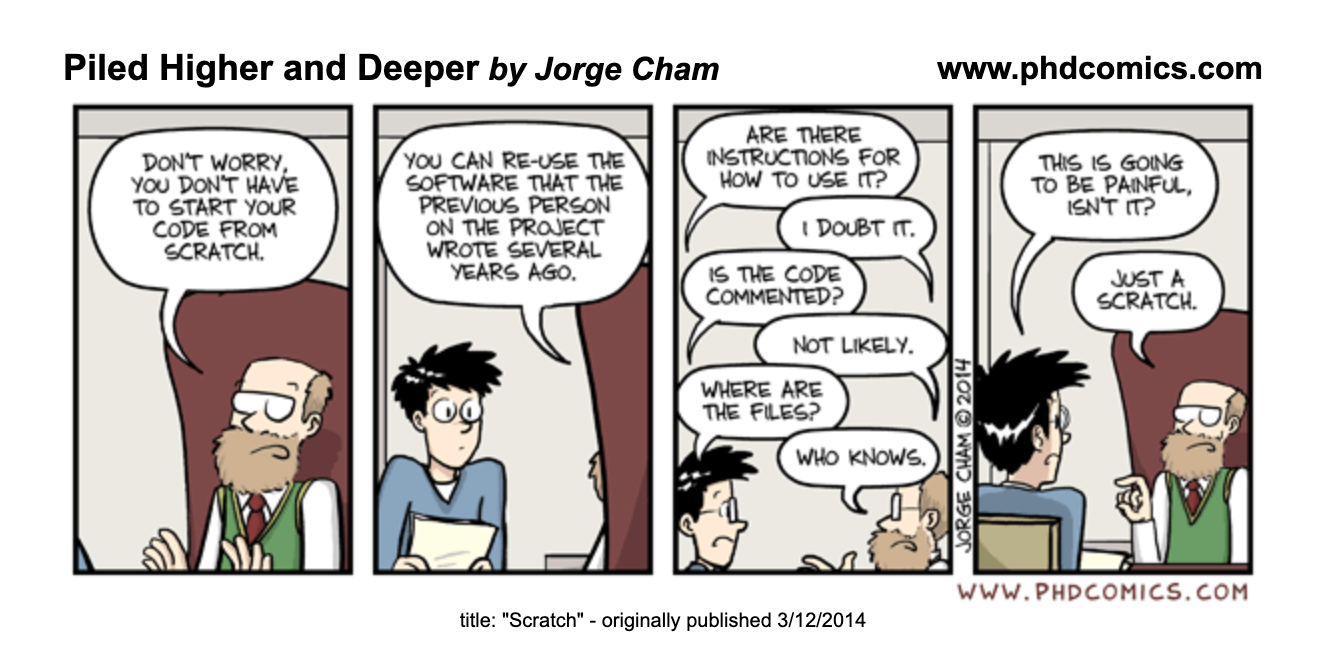

If CR practices make it easy to get set up and run a project, and again, the thing actually works, perhaps the next study can be done more quickly. At the very least, the new project owner will not need to reinvent the wheel in terms of project structure and tooling. Even if it’s just one day per study saved, imagine how that compounds over space and time. I’m sure you’ve either encountered or heard stories of grad students being handed code from their departed predecessors with no instructions on how to run it, no version history, no test suite, etc. Apparently that’s common enough to make a PhD Comic about it:

If you’re convinced of the value of Continuous Reproducibility—or just curious about it—and want help implementing CI/CD/CR practices in your lab, shoot me an email, and I’d be happy to help.

References and recommended resources

- Nicholas Bloom, Charles I. Jones, John Van Reenen, and Michael Webb (2020). Are Ideas Getting Harder to Find? American Economic Review. doi:10.1257/aer.20180338

- Brett K. Beaulieu-Jones and Casey S. Greene (2017). Reproducibility of computational workflows is automated using continuous analysis. Nature Biotechnology. doi:10.1038/nbt.3780

- Toward a Culture of Computational Reproducibility. https://youtube.com/watch?v=XjW3t-qXAiE

- There is a better way to automate and manage your (fluid) simulations. https://www.youtube.com/watch?v=NGQlSScH97s


December 13, 2024

Cloud-based LaTeX collaboration with Calkit and GitHub Codespaces

Research projects often involve producing some sort of LaTeX document, e.g., a conference paper, slide deck, journal article, proposal, or multiple of each. Collaborating on one of these can be painful, though there are web- or cloud-based tools to help, the most popular of which is probably Overleaf. Overleaf is pretty neat, but the free version is quite limited in terms of versioning, collaboration, and offline editing. Most importantly, I feel like it’s only really suited to pure writing projects. Research projects involve writing for sure, but they also involve (often iteratively) collecting and analyzing data, running simulations, creating figures, etc., which are outside Overleaf’s scope.

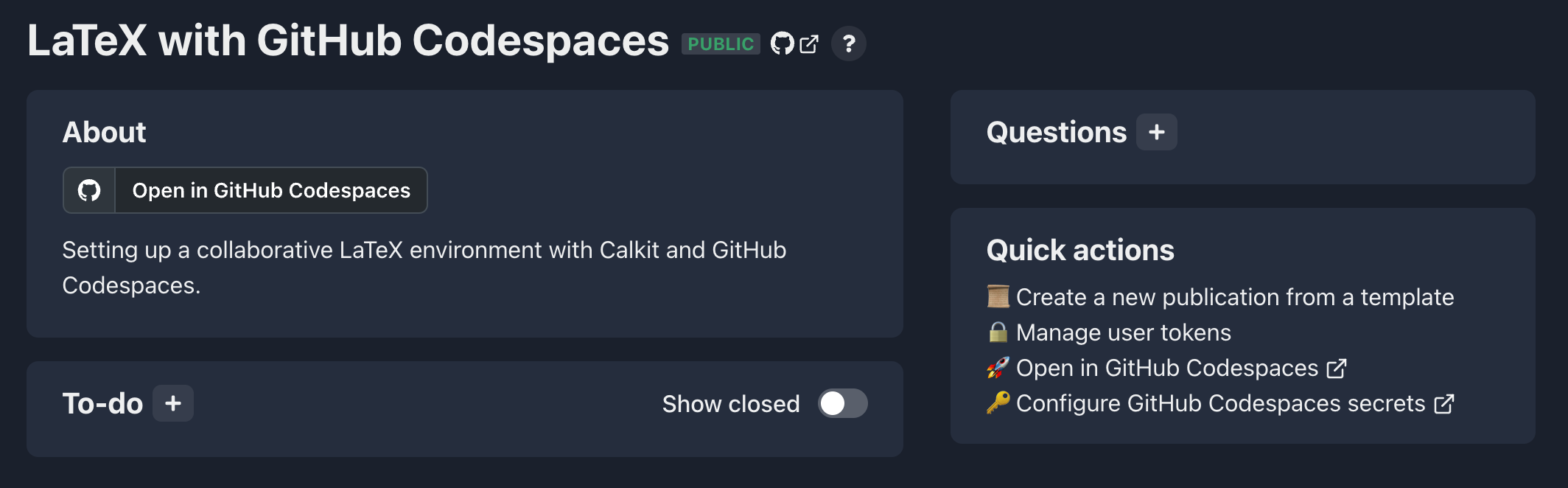

Calkit, on the other hand, is a research project framework encompassing all of the above, including writing, and is built upon tools that can easily run both in the cloud and locally, on- or offline, for maximum flexibility. Here, though, we’re going to focus on doing everything in a web browser. We’ll set up a collaborative LaTeX editing environment with Calkit and GitHub Codespaces, a container-based virtual machine service.

Disclosure: There is a paid aspect of the Calkit Cloud, which I manage, to help with the costs of running the system and to prevent users from pushing up unreasonable amounts of data. However, the software is open source, and there is a free plan that provides more than enough storage to do what we’ll do here.

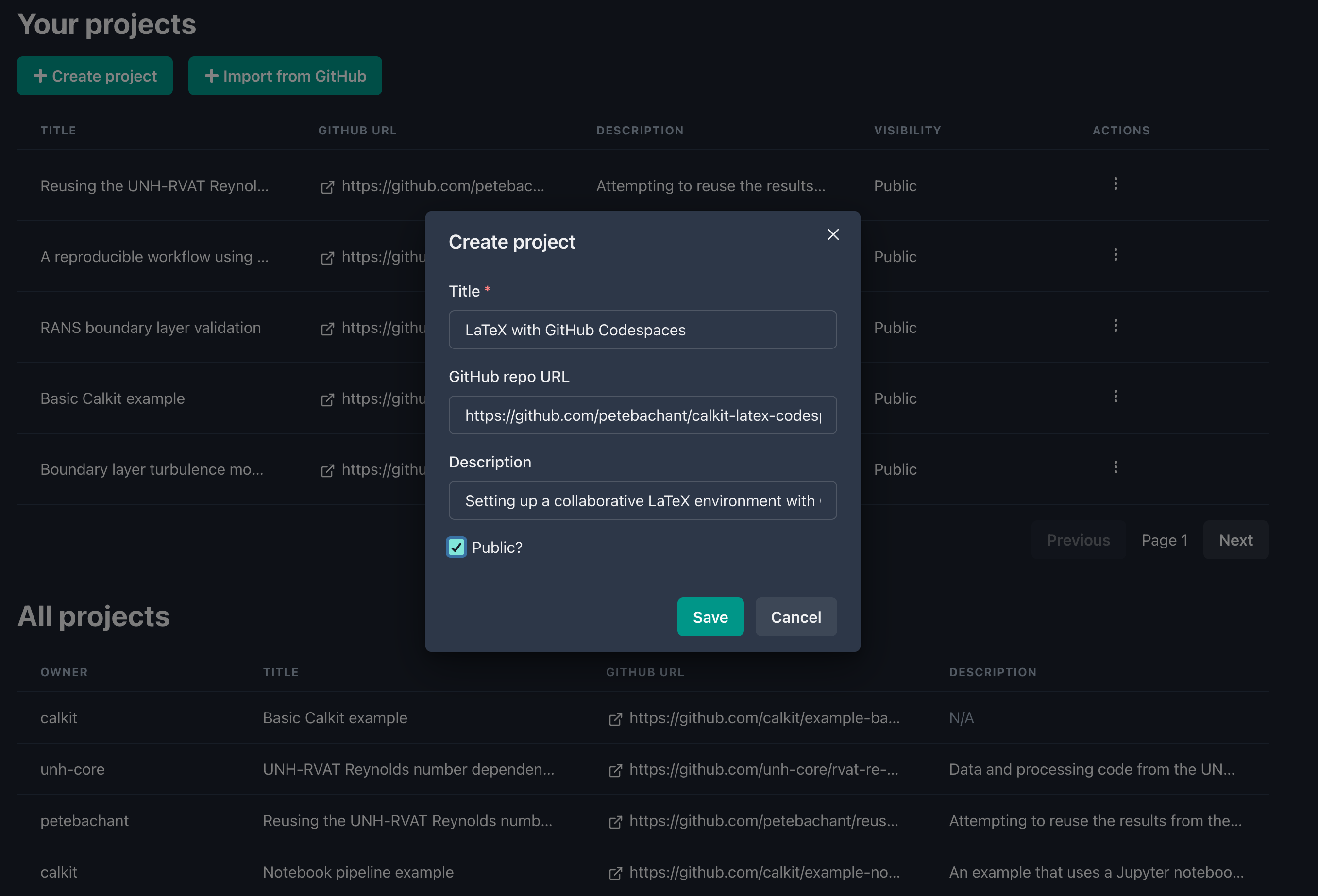

Create the project

In order to follow along, you’ll need a GitHub account, so if you don’t have one, sign up for free. Then head to calkit.io, sign in with GitHub, and click the “create project” button. Upon submitting, Calkit will create a new GitHub repository for us, set up DVC (Data Version Control) inside it, and create a so-called “dev container” configuration from which we can spin up our GitHub Codespace and start working.

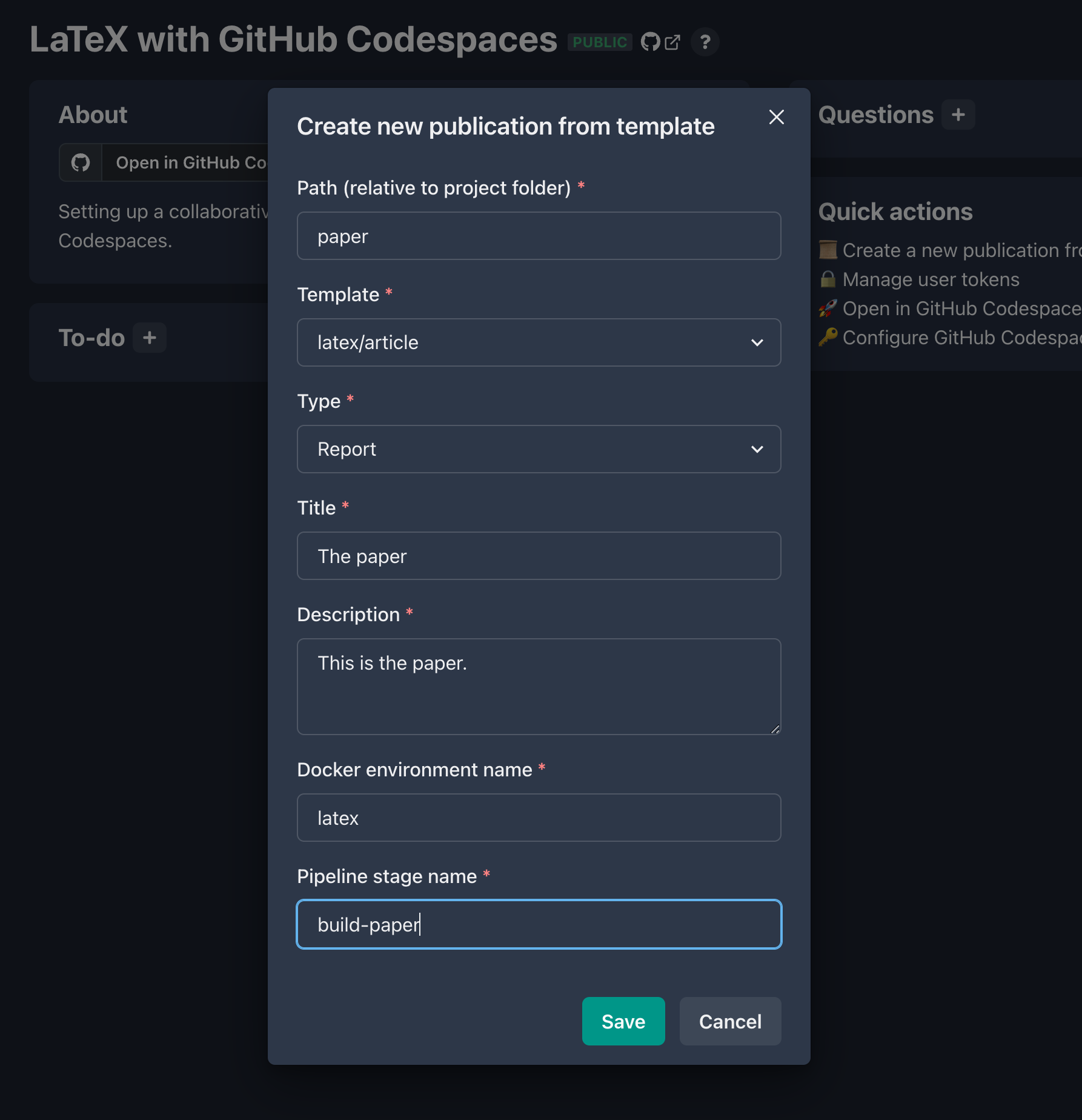

Add a new publication to the project

Click the quick action link on the project homepage to “create a new publication from a template.” In the dialog, select the

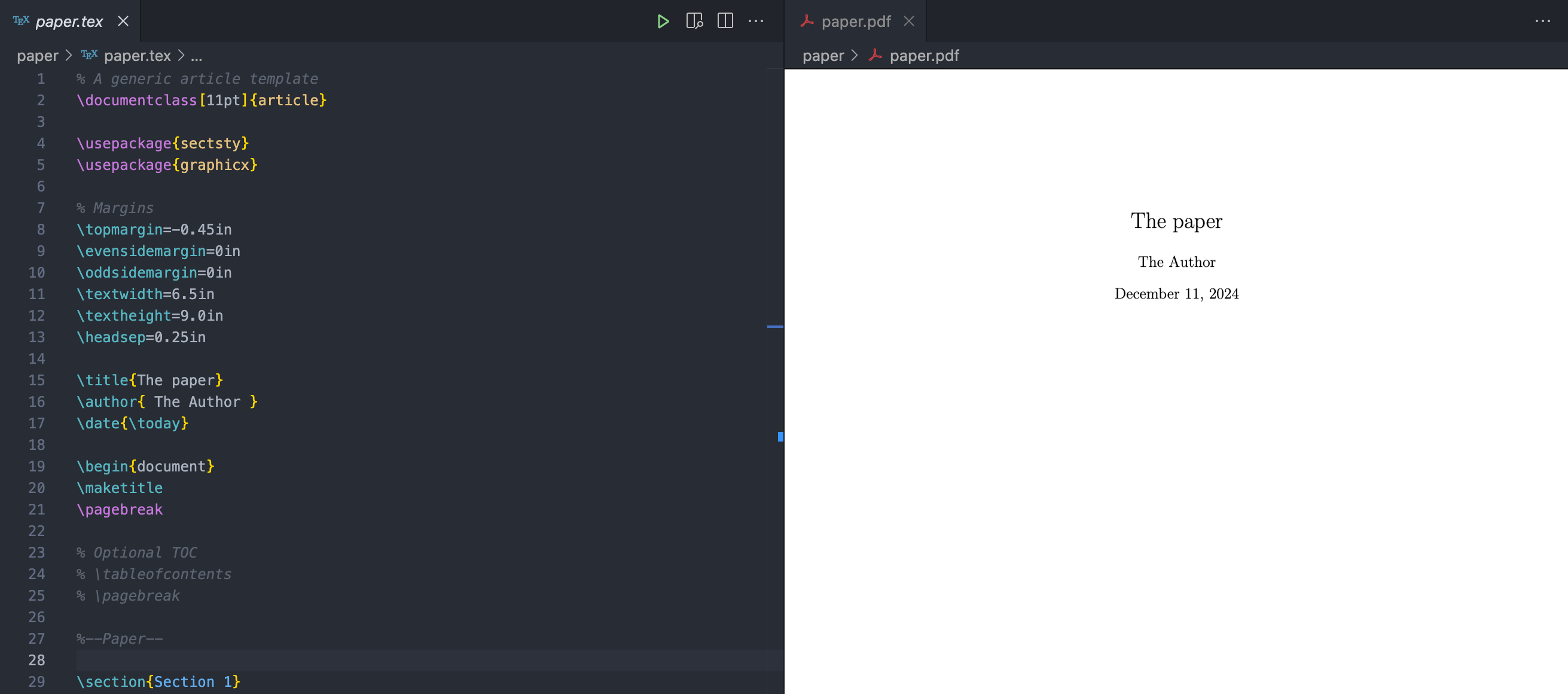

latex/articletemplate, and fill in the rest of the required information. This will add a LaTeX article to our repo and a build stage to our DVC pipeline, which will automatically create a TeX Live Docker environment to build the document. Here we’ll create the document in a new folder calledpaper:

Keep in mind that you’ll be able to add different LaTeX style and config files later on if the generic article template doesn’t suit your needs. Also, if you have suggestions for templates you think should be included, drop a note in a new GitHub issue.

Create the Codespace

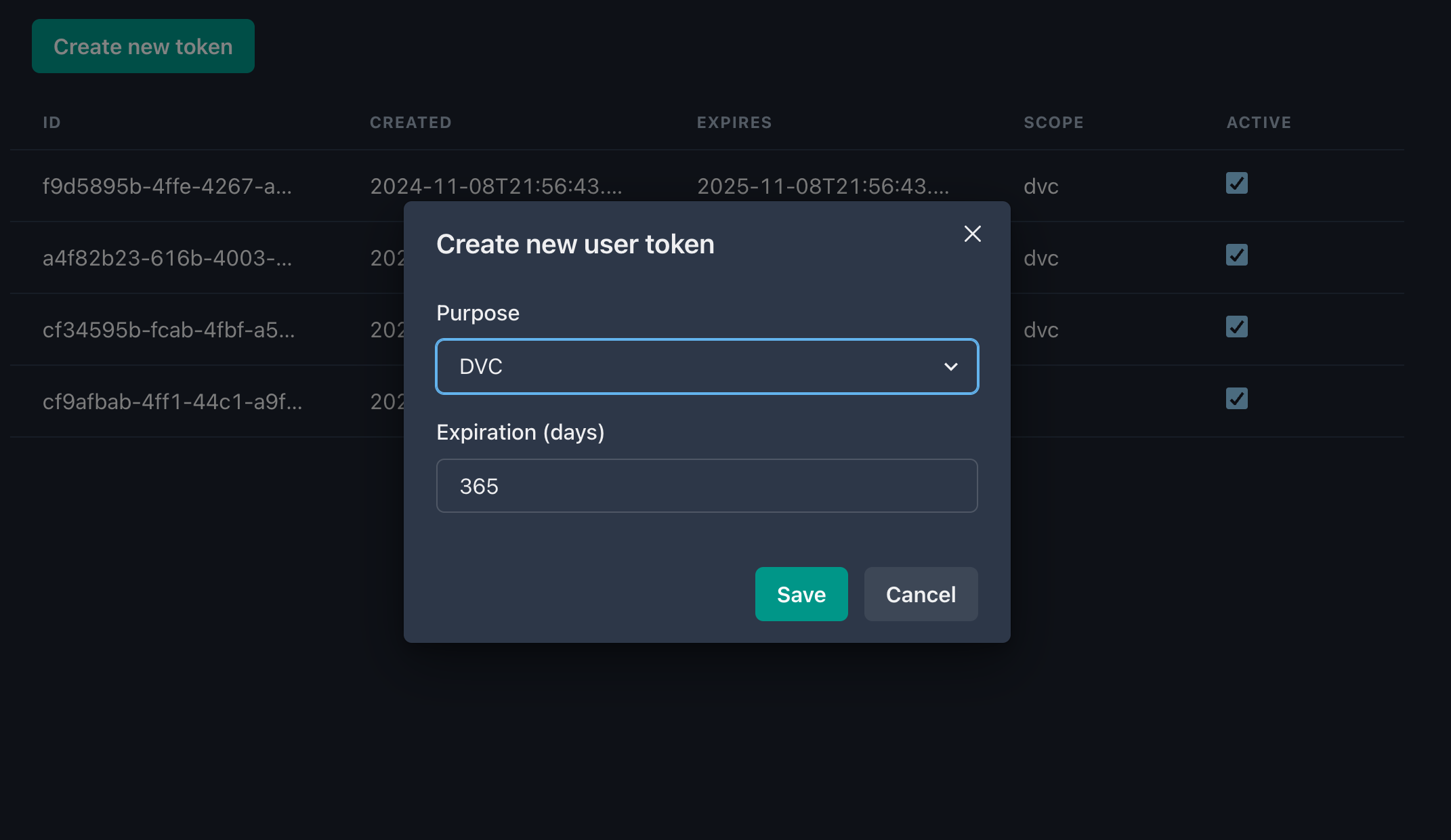

In order to push artifacts like PDFs up to the Calkit Cloud’s DVC remote, we will need a token and we’ll need to set it as a secret for the Codespace. On the Calkit project homepage you’ll see a link in the quick actions section for managing user tokens.

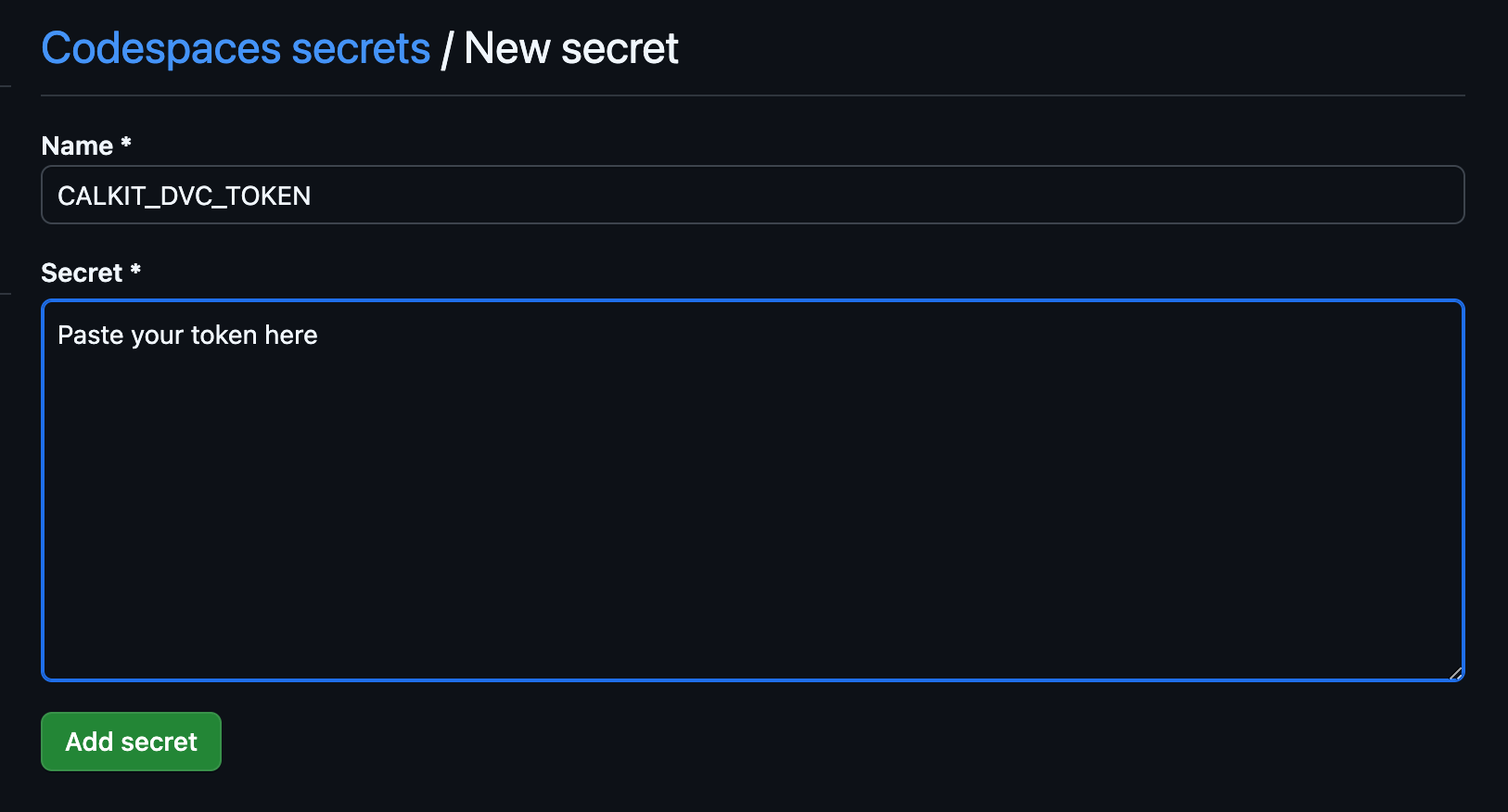

Head over there and create one, selecting “DVC” as the purpose. Save this in a password manager if you have one, then head back to the project homepage and click the quick action link to configure GitHub Codespaces secrets for the project. Create a secret called `CALKIT_DVC_TOKEN` and paste in the token.

Next, from the project homepage, click “Open in GitHub Codespaces.” Alternatively, if you haven’t created your own project, you can create your own Codespace in mine.

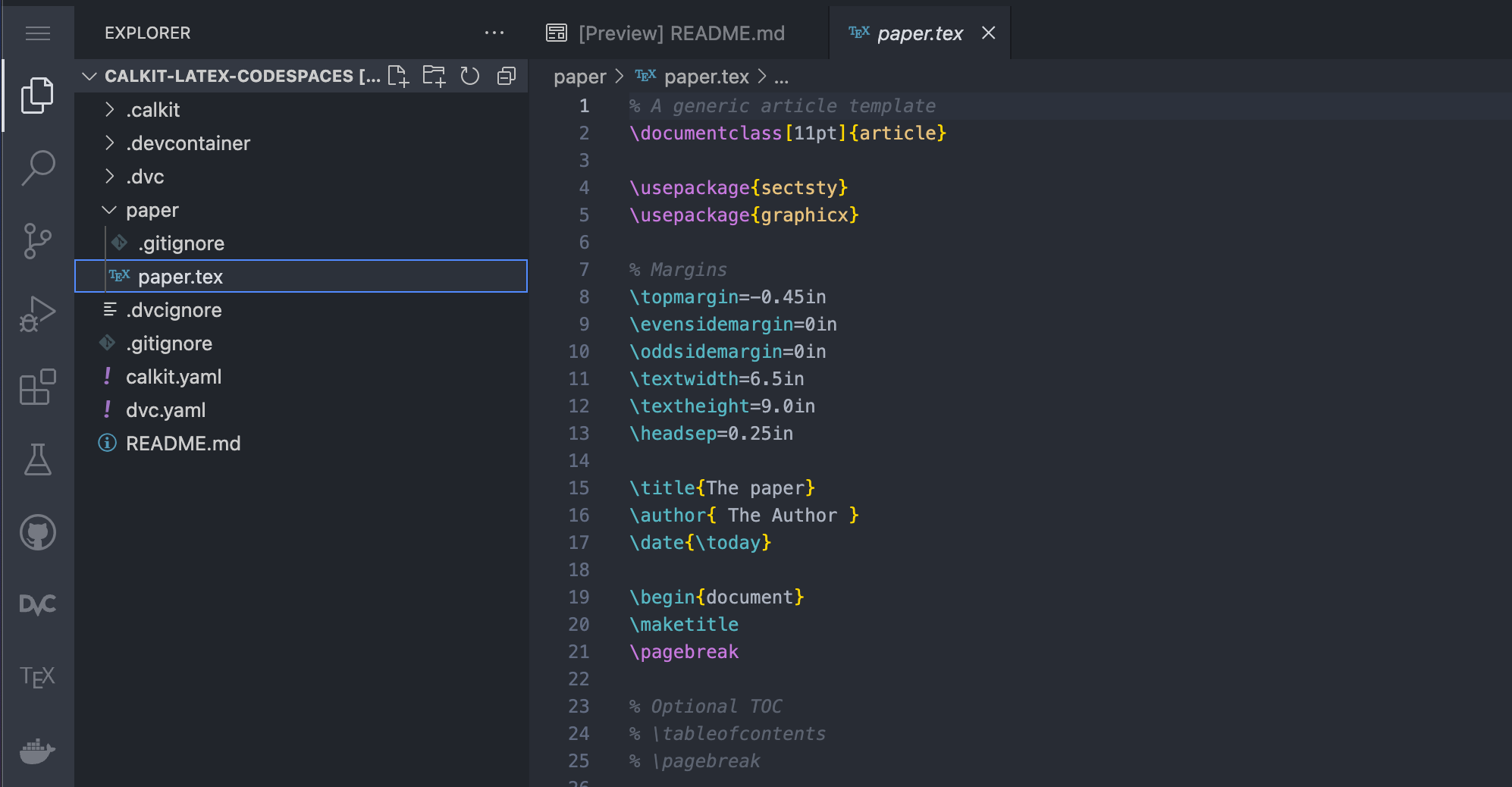

Once created, we’ll see an in-browser Visual Studio Code (VS Code) editor, which will have access to our project repository and will be able to compile the LaTeX document. Consider this your very own Linux virtual machine in the cloud for working on this project. You can update settings, add extensions, etc. You have total control over it. Note that GitHub does charge for Codespaces, but the free plan limits are reasonably generous. It’s also fairly easy to run the same dev container configuration locally in VS Code.

It might take a few minutes to start up the first time as the Codespace is created, so go grab a coffee or take the dog for a walk.
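Once the Codespace is up, you can confirm the token secret made it through. GitHub exposes Codespaces secrets to the codespace as environment variables, so `CALKIT_DVC_TOKEN` should be readable from the environment. The Python snippet below is a hypothetical check (not part of Calkit) that reports whether the variable is set without ever printing the token itself.

```python
import os


def describe_token(var: str = "CALKIT_DVC_TOKEN") -> str:
    """Report whether a secret environment variable is set, without revealing it."""
    token = os.environ.get(var, "")
    if not token:
        return f"{var} is not set; check the Codespaces secrets for this repository"
    # Report only the length so the token itself never ends up in terminal logs.
    return f"{var} is set ({len(token)} characters)"


if __name__ == "__main__":
    print(describe_token())
```

Saved to a scratch file (the name is up to you) and run with `python` from the Codespace terminal, it prints one line either way.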

Edit and build the document

After the Codespace is built and ready, we can open up `paper/paper.tex` and start writing.

If you look in the upper right-hand corner of the editor panel, you’ll see a play button icon added by the LaTeX Workshop extension. Clicking that will rebuild the document. Just to the right of that button is one that will open the PDF in a split window, which will refresh on each build.

Note that the play button will run the entire pipeline (like calling `calkit run` from a terminal), not just the paper build stage, so we can add more stages later, e.g., for creating figures, or even another LaTeX document, and everything will be kept up-to-date as needed. This is a major difference between this workflow and that of a typical LaTeX editor: the entire project is treated holistically. So, for example, there’s no need to worry about whether you forgot to rerun the paper build after tweaking a figure; it’s all one pipeline. See this project for an example, and check out the DVC stage documentation for more details on how to build and manage a pipeline.

Break lines in a Git-friendly way

This advice is not unique to cloud-based editing, but it’s worth mentioning anyway. When writing documents that will be versioned with Git, make sure to break lines properly by splitting them at punctuation or otherwise breaking into one logical phrase per line. This will help when viewing differences between versions and proposed changes from collaborators. If you write paragraphs as one long line and let them “soft wrap,” it will be a little more difficult.

So, instead of writing something like:

```latex
This is a very nice paragraph. It consists of many sentences, which make up the paragraph.
```

write:

```latex
This is a very nice paragraph.
It consists of many sentences,
which make up the paragraph.
```

The compiled document will look the same.
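If you already have prose written as one long line per paragraph, a rough first pass at converting it can be automated. The Python sketch below is a hypothetical helper (not part of Calkit or any editor extension) that puts each sentence on its own line by splitting after `.`, `?`, or `!` followed by whitespace. It will also split after abbreviations like “e.g.” and does not break at commas, so the result still deserves a quick manual review.

```python
import re

# Split wherever sentence-ending punctuation is followed by whitespace.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def one_sentence_per_line(paragraph: str) -> str:
    """Return the paragraph with each sentence on its own line.

    LaTeX treats a single newline like a space, so the compiled output is
    unchanged. A blank line would start a new paragraph, so none are added.
    """
    sentences = SENTENCE_END.split(paragraph.strip())
    return "\n".join(s for s in sentences if s)


if __name__ == "__main__":
    text = ("This is a very nice paragraph. "
            "It consists of many sentences, which make up the paragraph.")
    print(one_sentence_per_line(text))
```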

Commit and sync changes

For better or for worse, working with Git/GitHub is different from other systems like Google Docs, Overleaf, or Dropbox. Rather than syncing our files automatically, we need to deliberately “commit” changes to create a snapshot and then sync or “push” them to the cloud. This can be a stumbling block when first getting started, but one major benefit is that it makes one stop and think about how to describe a given set of changes. Another benefit is that every snapshot will be available forever, so if you create lots of them, you’ll never lose work. In a weird mood and ruined a paragraph that read well yesterday? Easy fix—just revert the changes.

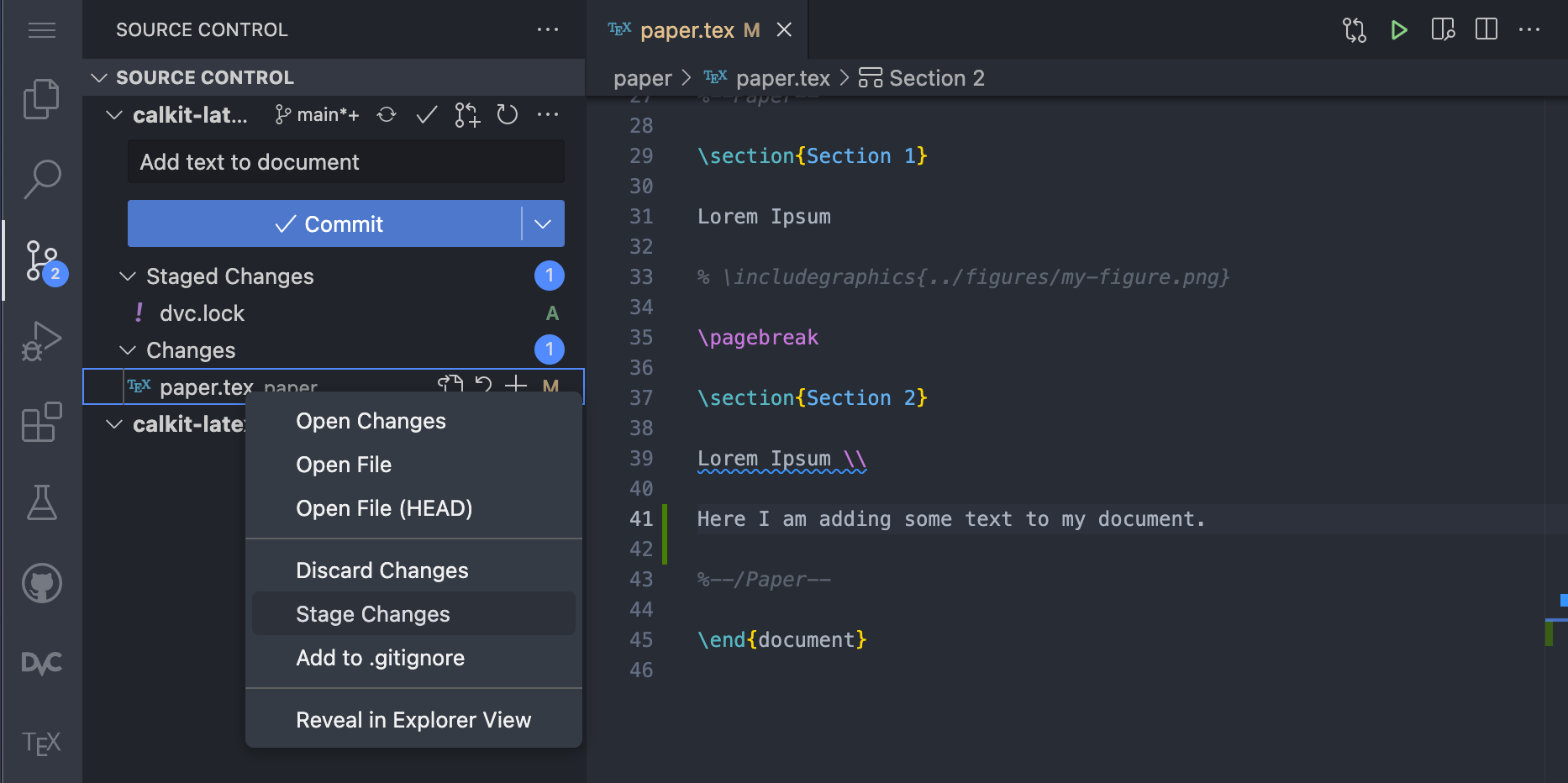

The VS Code interface has a built-in graphical tool for working with Git and GitHub, which can make things a little easier than learning the command-line interface (CLI). If we make some changes to `paper.tex`, we’ll see a blue notification dot next to the source control icon in the left sidebar. In this view, we can see that two files have changed: `paper.tex` and `dvc.lock`. The latter is a file DVC creates to keep track of the pipeline, and it shows up in the “Staged Changes” list, i.e., the list of files that would be added to a snapshot if we were to create a commit right now.

We want to save the changes to both this file and `paper.tex` in one commit, so let’s stage the changes to `paper.tex`, write a commit message, and click commit.

After committing we’ll see a button to sync the changes with the cloud, which we can go ahead and click. This will first pull from and then push our commits up to GitHub, which our collaborators will then be able to pull into their own workspaces.

Push the PDF to the cloud

The default behavior of DVC is to not save pipeline outputs like our compiled PDF to Git, but instead commit them to DVC, since Git is not particularly good at handling large and/or binary files. The Calkit Cloud serves as a “DVC remote” for us to push these artifacts to back them up and make them available to others with access to the project.

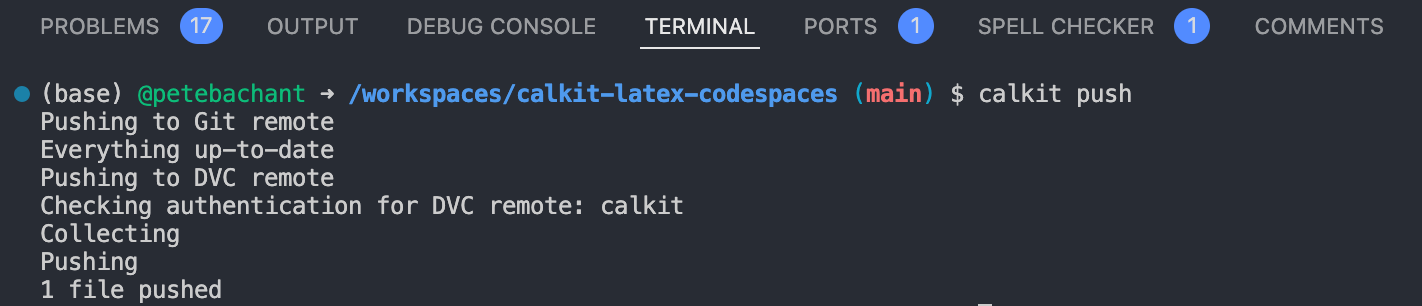

If we go down to the terminal and run `calkit push`, we’ll push our DVC artifacts (just the PDF at this point) up to the cloud as well, which will make our PDF visible in the publications section of the project homepage. Note that `calkit push` will also send the Git changes to GitHub, completely backing up the project.

Later on, if you end up adding things like large data files for analysis, or even photos and videos from an experiment, these can also be versioned with DVC and backed up in the cloud.
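Part of what makes this work is that DVC identifies each output by a hash of its contents: Git only stores a small text record (here, in `dvc.lock`), while the file itself goes to a local cache and, on push, to the remote. The Python sketch below is a much-simplified, hypothetical illustration of that content-addressing idea, not DVC’s actual cache layout or code. It stores a file under a path derived from its MD5 hash, so identical content is stored only once and any version can be restored from its recorded hash alone.

```python
import hashlib
import shutil
from pathlib import Path


def md5_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large files don't have to fit in memory."""
    h = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def store(path: Path, cache_dir: Path) -> str:
    """Copy a file into a content-addressed cache and return its hash.

    The first two hex characters become a subdirectory, which keeps any one
    directory from holding too many files.
    """
    digest = md5_of(path)
    target = cache_dir / digest[:2] / digest[2:]
    if not target.exists():  # identical content is only stored once
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target)
    return digest


def restore(digest: str, cache_dir: Path, dest: Path) -> None:
    """Recreate a file from the cache given only its recorded hash."""
    shutil.copy2(cache_dir / digest[:2] / digest[2:], dest)


if __name__ == "__main__":
    import tempfile

    work = Path(tempfile.mkdtemp())
    pdf = work / "paper.pdf"
    pdf.write_bytes(b"%PDF-1.7 first draft")
    v1 = store(pdf, work / "cache")
    pdf.write_bytes(b"%PDF-1.7 second draft")
    v2 = store(pdf, work / "cache")
    restore(v1, work / "cache", work / "paper-v1.pdf")
    print(v1 != v2, (work / "paper-v1.pdf").read_bytes())  # True b'%PDF-1.7 first draft'
```

Because Git only sees the short hash, the repository stays small no matter how many PDF versions, datasets, or videos accumulate.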

Collaborate concurrently

What we’ve seen so far is mostly an individual’s workflow. But what if we have multiple people working on the document at the same time? Other cloud-based systems like Google Docs and Overleaf allow multiple users to edit a file simultaneously, continuously saving behind the scenes. My personal opinion is that concurrent collaborative editing is usually not that helpful, at least not on the same paragraph(s). However, if you really like the Google Docs experience, you can set up the Codespace for live collaboration. Otherwise, collaborators can create their own Codespaces from the same configuration, just like we created ours.

Git is actually quite good at automatically merging changes together, but typically you’ll want to make sure no two people are working on the same lines of text at the same time. You’ll need to communicate a little more with your collaborators so you don’t step on each other’s toes and end up with merge conflicts, which require manual fixes. You could simply send your team a Slack message letting them know you’re working on the doc, or a given section, and avoid conflicts that way. You could split up the work by paragraph or section, and even use LaTeX `\input` commands in the main `.tex` file to allow each collaborator to work on their own file.

Git can also create different branches of the repo in order to merge them together at a later time, optionally via GitHub pull requests, which can allow the team to review proposed changes before they’re incorporated. However, for many projects, it will be easier to have all collaborators simply commit to the main branch and continue to clean things up as you go. If commits are kept small with descriptive messages, this will be even easier. Also, be sure to run `git pull` often, either from the UI or from the terminal, so you don’t miss out on others’ changes.

Manage the project with GitHub issues

Another important aspect of collaborative writing is reviewing and discussing the work. I recommend using GitHub issues as a place to create and discuss to-do items or tasks, which is particularly helpful for team members who are mostly reviewing rather than writing.

One approach to creating issues is to download the latest PDF of the document, add comments, and attach the marked-up PDF to a new GitHub issue. A variant of this is printing it out and scanning a copy with red pen all over it.

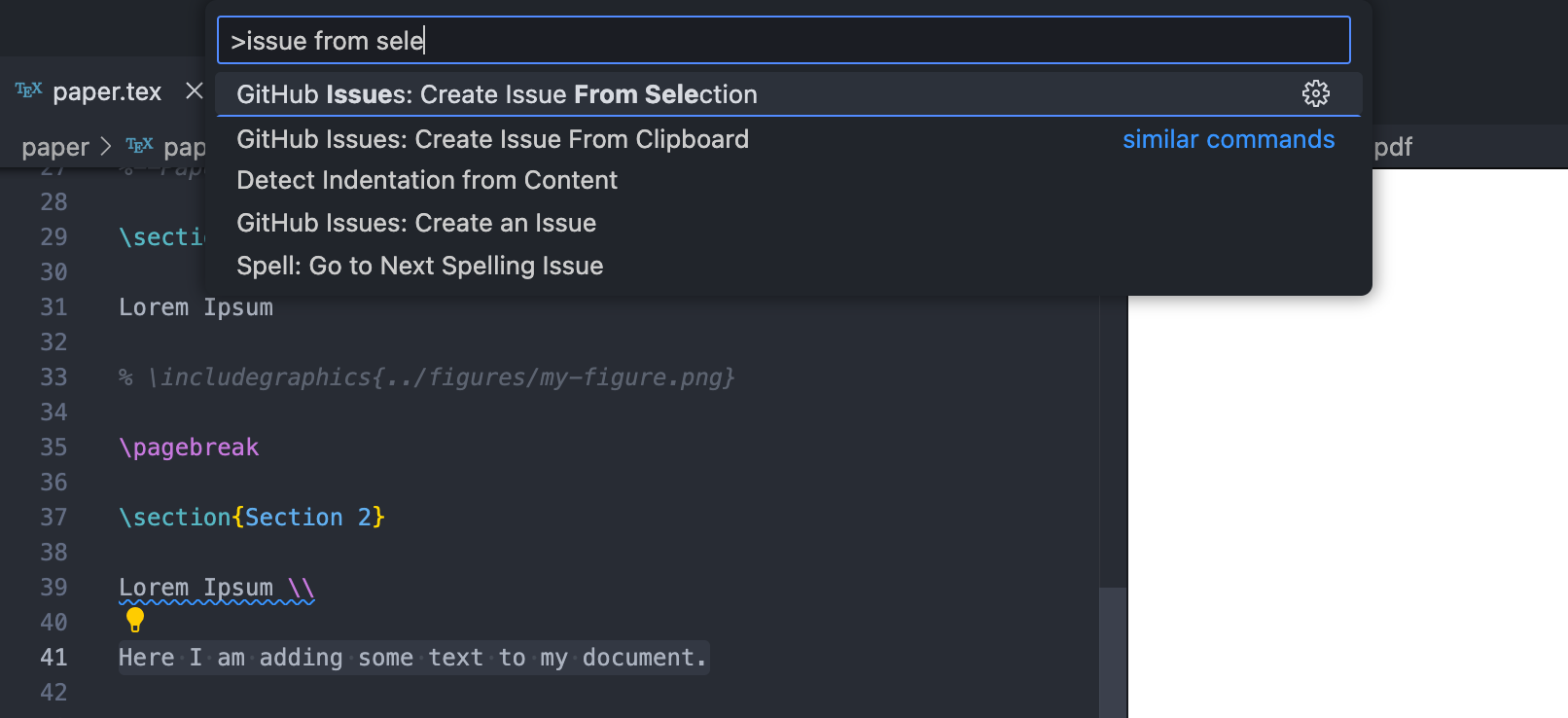

Another approach is to create issues from within VS Code. In the `.tex` file, you can highlight some text and create a GitHub issue from it with the “Create Issue From Selection” command. Open up the command palette with `ctrl/cmd+shift+p` and start typing “issue from selection”. The command should show up at the top of the list.

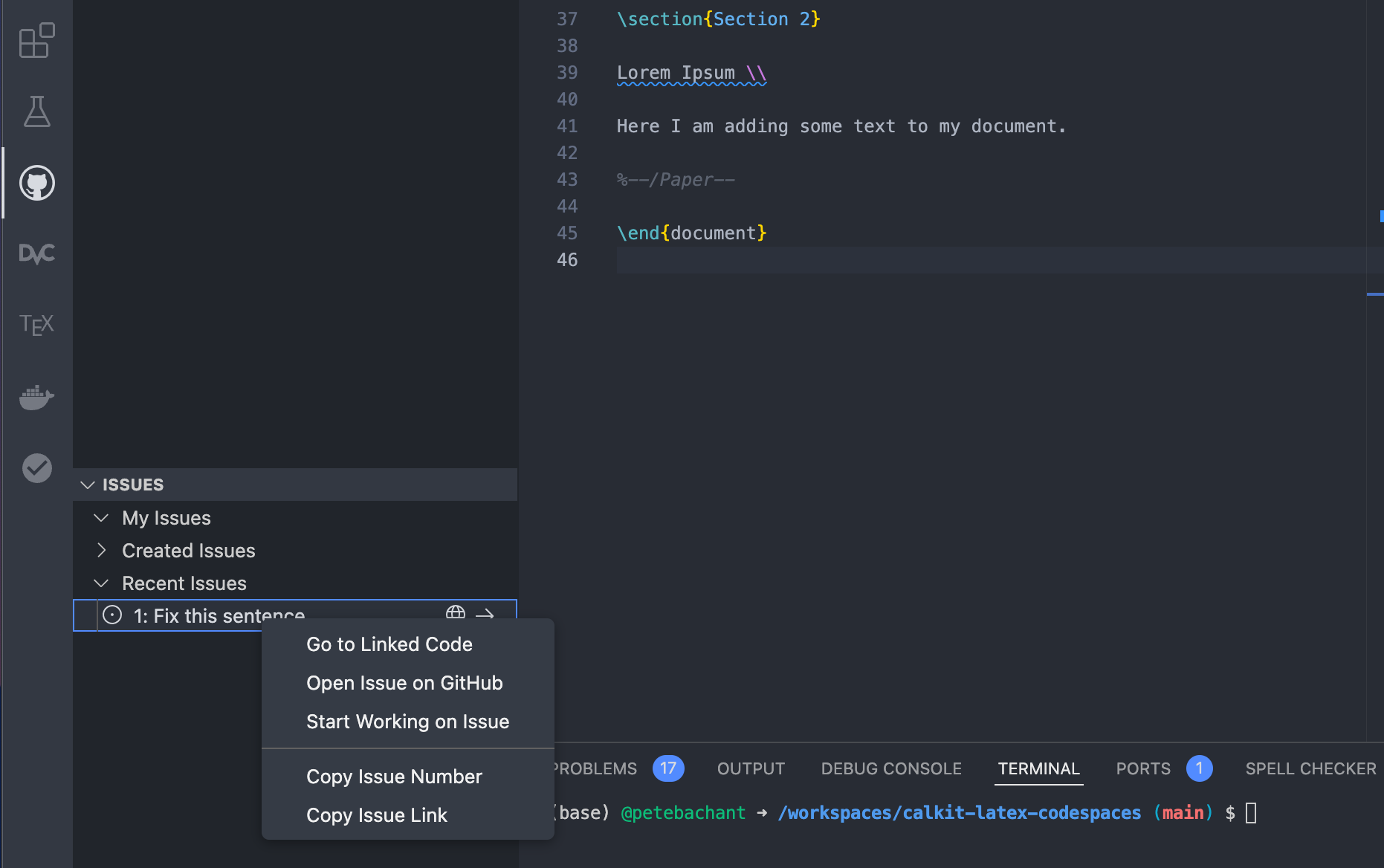

After you create a new issue, click the GitHub icon in the left pane and look through the recent issues. You can right click on an issue and select “Go to Linked Code” to do just that.

If you make a commit that addresses a given issue, you can include “fixes #5” or “resolves #5” in the commit message, referencing the issue number, and GitHub will automatically close it.
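GitHub recognizes a fixed set of closing keywords (close, closes, closed, fix, fixes, fixed, resolve, resolves, and resolved), matched case-insensitively, optionally followed by a colon, then an issue reference. The Python sketch below is a hypothetical helper, not GitHub’s own implementation, that approximates this matching for same-repository references like `#5`, so you can check which issues a commit message would close. Keep in mind that GitHub acts on these keywords when the commit lands on the repository’s default branch, which fits the commit-to-main workflow described above.

```python
import re

# GitHub's closing keywords, an optional colon, whitespace, then "#<number>".
CLOSING = re.compile(
    r"\b(?:close[sd]?|fix(?:e[sd])?|resolve[sd]?):?\s+#(\d+)\b",
    re.IGNORECASE,
)


def issues_closed_by(message: str) -> list[int]:
    """Return the issue numbers a commit message would close, in order of appearance."""
    return [int(n) for n in CLOSING.findall(message)]


if __name__ == "__main__":
    msg = "Tighten intro paragraph\n\nFixes #5, resolves #12; see #7 for context."
    print(issues_closed_by(msg))  # [5, 12]
```

Plain references like “see #7” only link the issue; they don’t close it.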

For complex cases with lots of tasks and team members, GitHub Projects is a nice solution, allowing you to put your tasks into a Kanban board or table, prioritize them, assess their effort level, and more. Also note that these GitHub issues will show up in the “To-do” section on the Calkit project homepage, and can be created and closed from there as well.

Conclusions

Here we set up a way to collaborate on a LaTeX document in the cloud using GitHub Codespaces. The process was a little more involved compared to using a dedicated LaTeX web app like Overleaf, but our assumption was that this document is part of a larger research project that involves more than just writing. Because the document is integrated into a Calkit project, it is built as a stage in a DVC pipeline, which can later be extended to include other computing tasks like creating datasets, processing them, making figures, and more.

We also went over some tactics to help with version control, concurrent editing, and project management. Though we did everything in a web browser, this setup is totally portable. We’ll be able to work just as well locally as in the cloud, allowing anyone to reproduce the outputs anywhere.

Have you struggled to collaborate on LaTeX documents with your team before? I’d be interested to hear your story, so feel free to send me an email.